Vision Features

When building a model, machine learning engineers use the features of each data observation to perform either a classification or a regression task. For instance, to predict the price of a house we need attributes such as its number of bedrooms, the size of its garage and the size of its living room. These attributes are features of the house that could tell us something about its price, so they are used to build a regression model.

However, in the context of computer vision, features might not appear as clearly as they do in tabular data. In this blog post I explained how an image is basically an array of numbers, or a table of numbers if you prefer to see it that way, complete with rows and columns. We can't treat all of these numbers as features, because images often contain noise, i.e. objects in the background, and the object of interest typically isn't the only object in the frame.

Extracting Features In Computer Vision

The challenge, therefore, is to identify features specific to the object of interest and to eliminate, to a reasonable degree, noisy or non-essential pixels. To do this, a technique called convolution is used to detect the edges or outline of the object and 'black out' noisy pixels. The pixels of the detected edges can then be said to be features of the image.

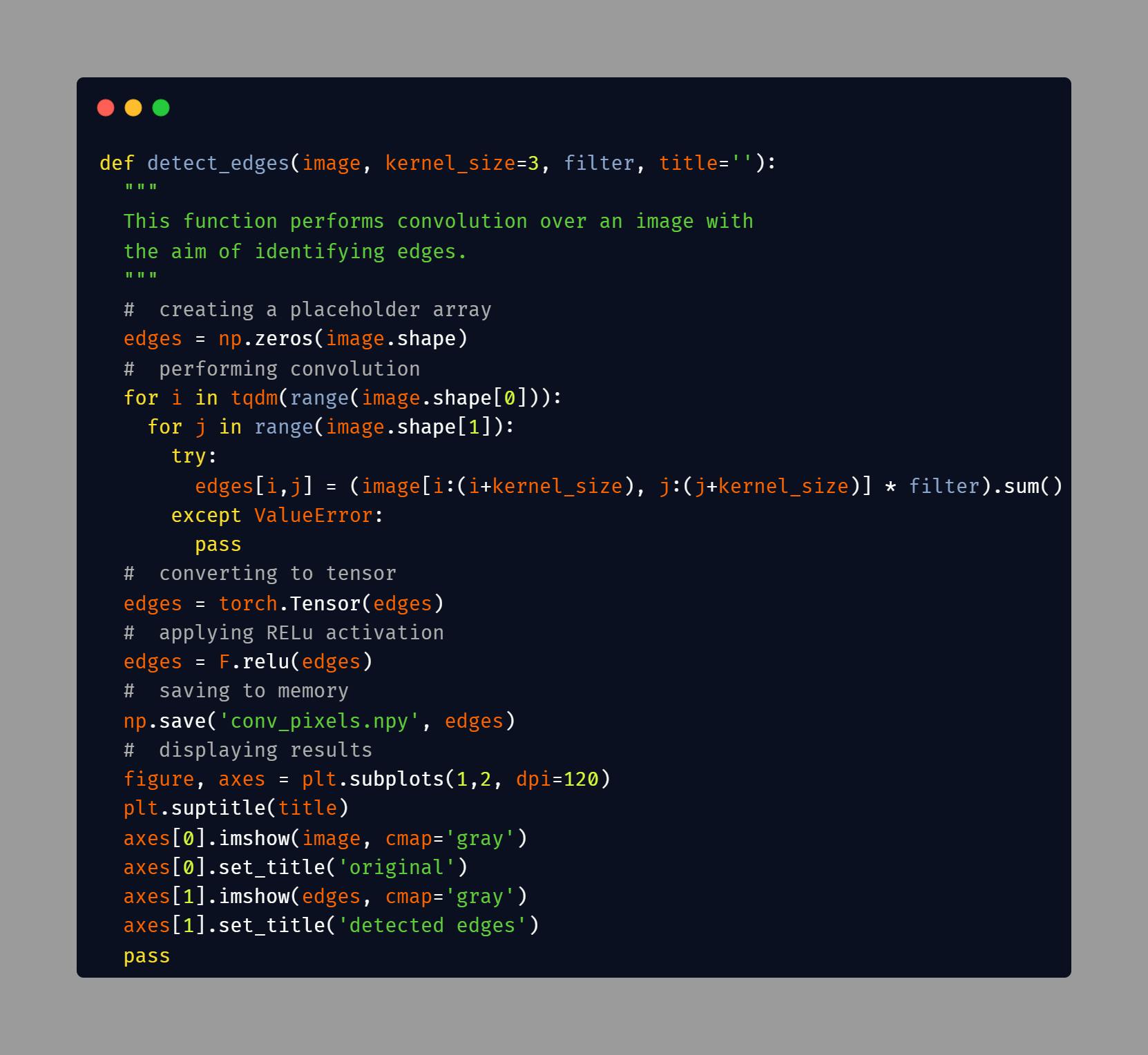

In a typical convolution process, a filter, which is usually a small matrix of numbers, is applied to successive patches of an image. For each patch, an element-wise product followed by a sum is computed, and the result is returned as a single pixel in a feature map.
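The sliding-window process described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the function name `convolve2d`, the toy 4x4 image and the 2x2 filter are all assumptions made for the example, and it uses no padding and a stride of 1. As is conventional in deep learning, the filter is not flipped before it is applied.

```python
import numpy as np


def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Patch of the image currently covered by the filter
            patch = image[i:i + kh, j:j + kw]
            # Element-wise product followed by a sum gives one output pixel
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map


# Toy 4x4 "image" and a 2x2 diagonal-difference filter (both hypothetical)
image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])

feature_map = convolve2d(image, kernel)
print(feature_map.shape)  # (3, 3)
```

Note that the feature map is smaller than the input: a 2x2 filter on a 4x4 image fits in only 3x3 positions. Real libraries usually offer padding options to control this.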

Filters

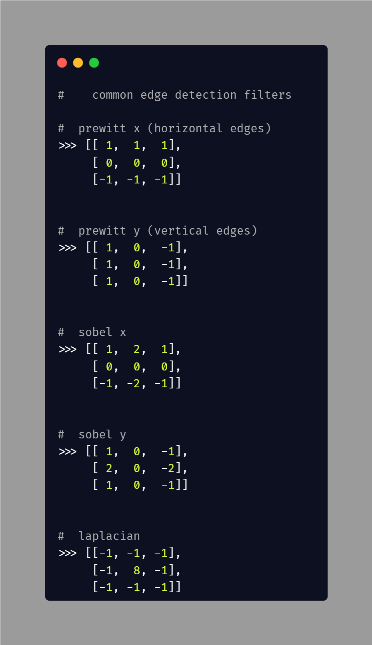

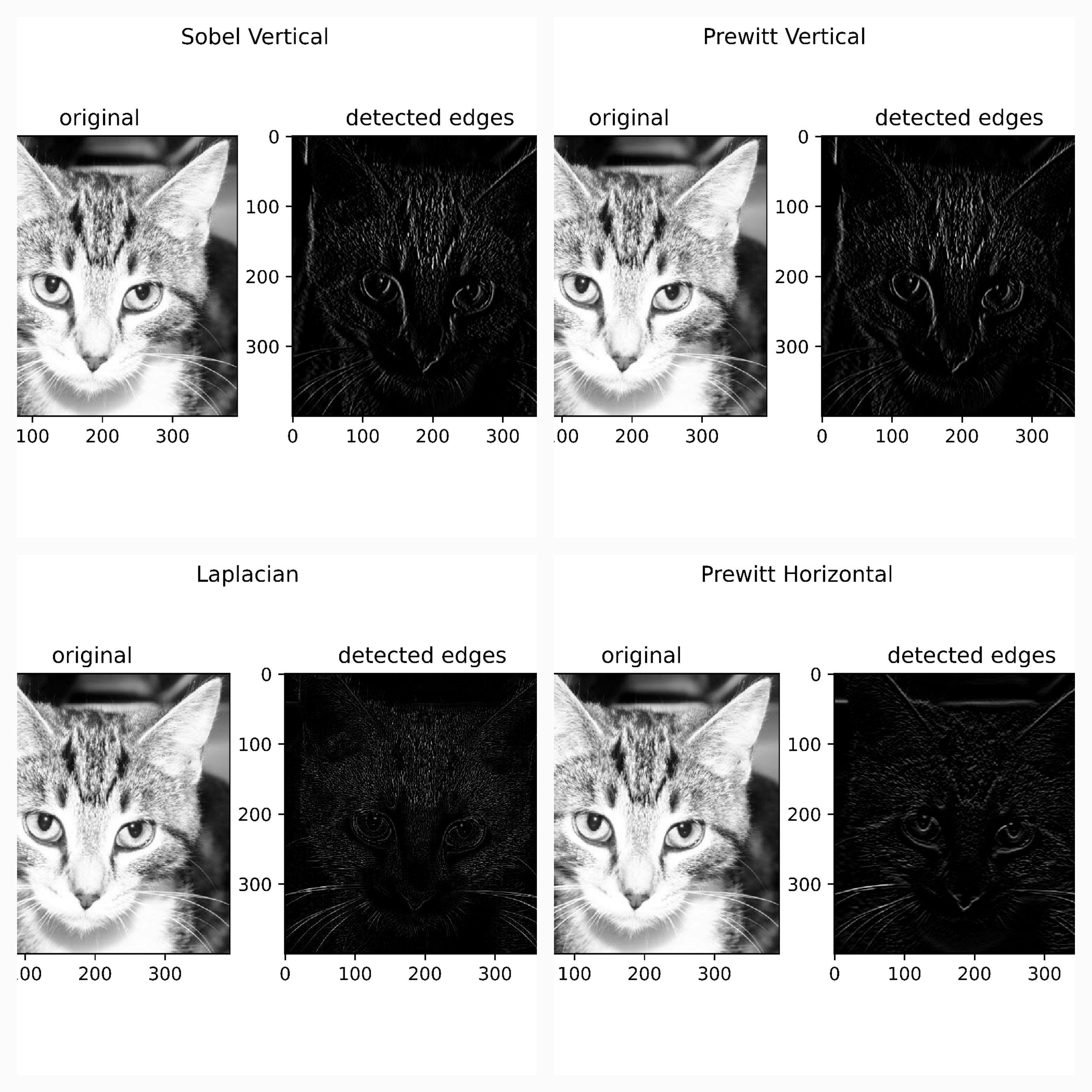

The kind of feature map produced by convolution depends on the filter used. Some filters are suited to detecting edges, while others are suited to blurring images. Simple edge detection filters include the Sobel, Prewitt and Laplacian filters.

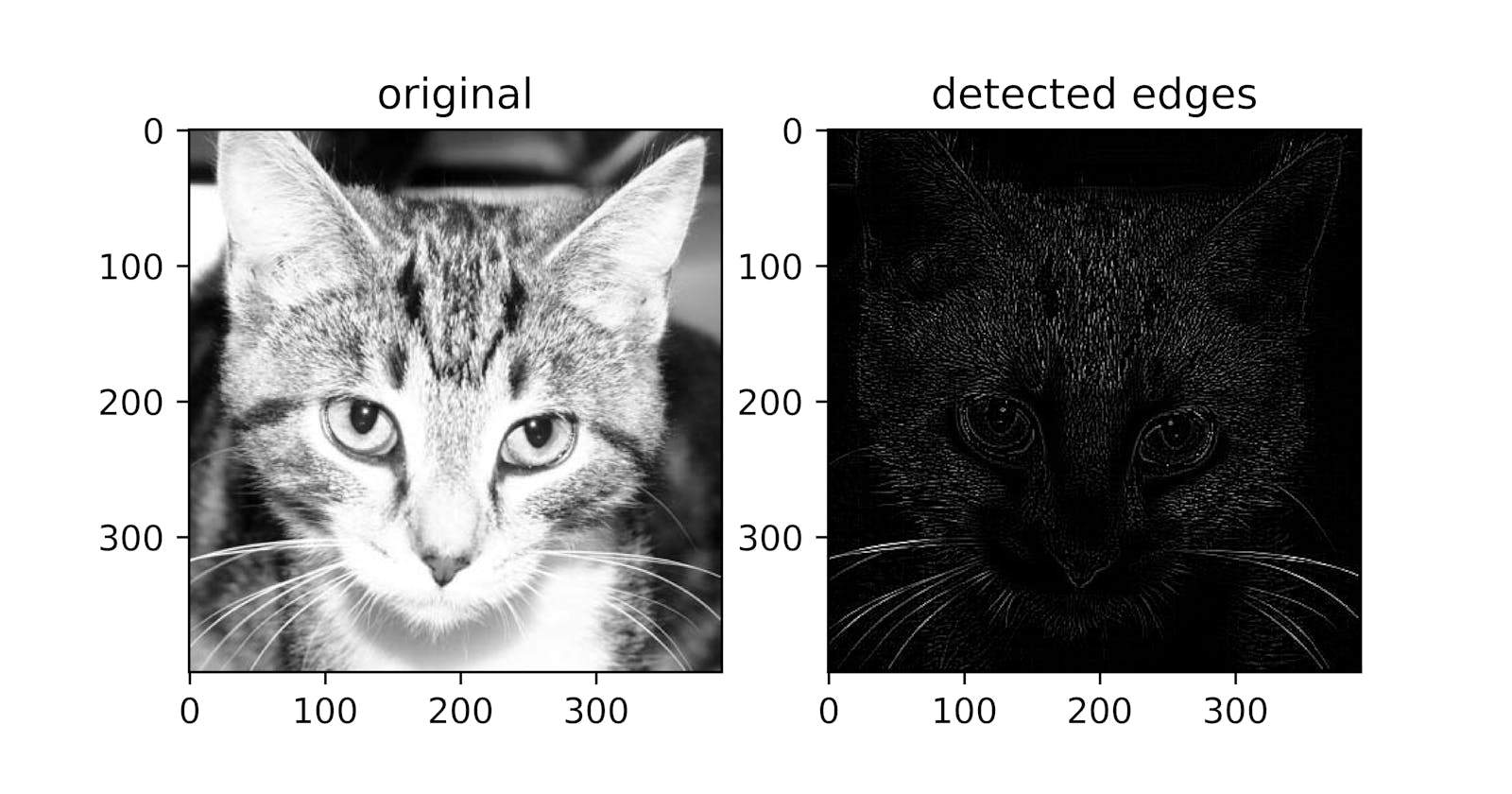
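To make these filters concrete, here is a sketch of commonly used 3x3 forms of the Sobel, Prewitt and Laplacian kernels, applied to a tiny synthetic image containing a single vertical edge. The exact values are the usual textbook variants and may differ from those shown in the figure; the helper `apply_filter` and the `step` test image are assumptions made for this example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Common textbook forms of the kernels (other variants exist)
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T
PREWITT_X = np.array([[-1, 0, 1],
                      [-1, 0, 1],
                      [-1, 0, 1]], dtype=float)
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)


def apply_filter(image, kernel):
    """Apply `kernel` to every patch of `image` (no padding, stride 1)."""
    windows = sliding_window_view(image, kernel.shape)  # (H-2, W-2, 3, 3)
    return np.einsum("ijkl,kl->ij", windows, kernel)


# Synthetic image: dark left half, bright right half (a vertical edge)
step = np.zeros((6, 6))
step[:, 3:] = 1.0

kernels = {"sobel_x": SOBEL_X, "sobel_y": SOBEL_Y,
           "prewitt_x": PREWITT_X, "laplacian": LAPLACIAN}
responses = {name: apply_filter(step, k) for name, k in kernels.items()}

for name, response in responses.items():
    print(name, response[0])  # first row of each feature map
```

The horizontal-gradient kernels respond only around the vertical edge, while `SOBEL_Y` stays at zero everywhere because the image has no horizontal edges. Each kernel's entries sum to zero, which is why flat regions produce no response.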

To demonstrate this, take the image of a cat below. The object of interest here is the cat, but there are some background elements that have nothing to do with it. To extract features from this image, we need to perform edge detection.

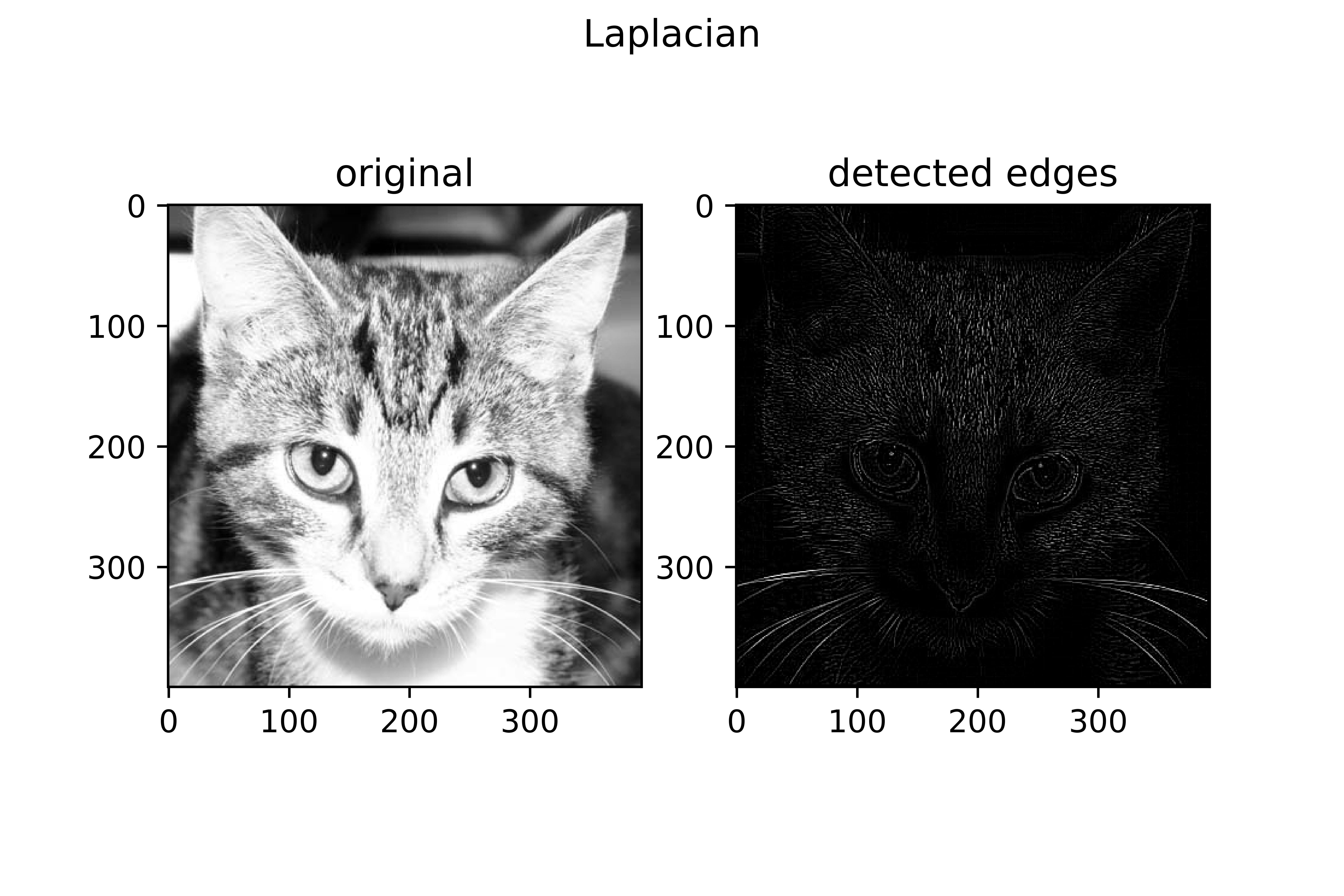

Applying the Laplacian filter above yields an image of the cat in which the noise is largely removed and the edges of the cat are highlighted. These highlighted pixels are taken as features of the image.
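The same idea can be tried on a synthetic image when no photo is at hand. The sketch below assumes a 10x10 image with a bright square standing in for the object of interest, applies the 4-neighbour Laplacian kernel, and keeps the pixels with a strong response as edge features. The image, the threshold of 0.5 and the variable names are all illustrative choices, not values from the cat example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)

# Synthetic image: a bright 4x4 square (the "object") on a dark background
image = np.zeros((10, 10))
image[3:7, 3:7] = 1.0

# Apply the filter to every 3x3 patch (no padding, so the output is 8x8)
windows = sliding_window_view(image, LAPLACIAN.shape)
response = np.einsum("ijkl,kl->ij", windows, LAPLACIAN)

# Keep pixels whose response is strong; 0.5 is an arbitrary threshold
edge_mask = np.abs(response) > 0.5

# Shift by 1 to map feature-map positions back to image coordinates
edge_pixels = np.argwhere(edge_mask) + 1

print(edge_mask.astype(int))
print(len(edge_pixels), "edge pixels kept as features")
```

Flat regions, both the background and the interior of the square, give a zero response and are blacked out, so only the outline of the square survives.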

The kind of features (edges) extracted depends on the kind of filter used. Here are some interesting edges produced by some of the filters above.

All of these form the building blocks of a Convolutional Neural Network, a neural network in which the most effective filters are learned from the data via backpropagation. This is the very essence of vision models, which allow computers to derive meaningful information from visual data.
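To give a feel for what "learning a filter" means, here is a toy sketch rather than a real CNN: a single 3x3 filter starts at zero and is adjusted by gradient descent until its feature map matches one produced by a Laplacian kernel. The random image, the learning rate, the number of steps and the hand-derived gradient of the mean squared error are all assumptions chosen for this illustration. In practice a deep learning framework computes these gradients automatically across many layers.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))  # random stand-in for training data

# The filter we pretend not to know; it only generates the target feature map
target_kernel = np.array([[0, 1, 0],
                          [1, -4, 1],
                          [0, 1, 0]], dtype=float)

windows = sliding_window_view(image, (3, 3))  # all 3x3 patches: (30, 30, 3, 3)
target_map = np.einsum("ijkl,kl->ij", windows, target_kernel)

kernel = np.zeros((3, 3))  # learnable filter, initialised to zero
learning_rate = 0.1        # hypothetical value chosen for this toy problem

for step in range(300):
    # Forward pass: feature map produced by the current filter
    prediction = np.einsum("ijkl,kl->ij", windows, kernel)
    error = prediction - target_map
    # Backward pass: gradient of the mean squared error w.r.t. each filter weight
    grad = 2.0 * np.einsum("ij,ijkl->kl", error, windows) / error.size
    # Gradient descent update
    kernel -= learning_rate * grad

print(np.round(kernel, 3))
```

After training, the learned weights closely match the Laplacian kernel, even though the filter was never given those values directly. It only ever saw the image and the desired feature map.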